How to build privacy preserving web applications

October 28, 2017

6 min. read

Play4Privacy (P4P) was a good opportunity to challenge ourselves with building a web application which doesn’t compromise on privacy.

In this article, I will tell about this experience.

It’s about Trackers

The reason why privacy preserving websites are not the norm, but rather an exception nowadays, is: trackers.

Trackers are not only bad for privacy, but also for security and for performance (and/or battery life if you’re on a mobile device).

Since the definition of tracker is in the context of web applications is not very clear, here’s my proposal:

A tracker is a piece of software which has either the purpose or the side effect of leaking information about my online behaviour to 3rd parties without my knowledge and consent.

With this definition at hand, we can define various categories of trackers, most notably:

• advertising networks

• social media widgets

• analytics services

That’s how the P4P websites relate to those categories:

Ads:

P4P isn’t monetized by selling their visitors attention.

Social media:

There’s no social media widgets which automatically connect to social media services without a visitor’s explicit consent. This is an important detail:

p4p.lab10.coop and play.lab10.coop do include links to social media. Clicking those does cause the browser to connect to the linked 3rd party service and typically even tells that 3rd party where the visitor is coming from via the HTTP referer mechanism.

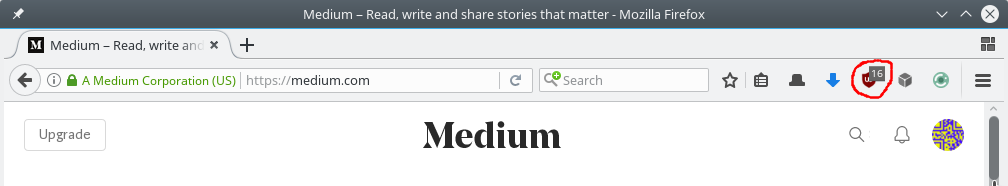

However what does not happen is that by just loading the site, a visitor browser already connects to a 3rd party service, letting them know who (IP address) visited what (URL) and when (timestamp). That is exactly what happens on websites which for example embed a Facebook like button.

To make that distinction very clear (because to this day I encounter even web developers not being aware of it): an embedded Facebook like button leaks that information even when the website visitor does not click the button.

If you create websites and want to have a deeper integration with social networks than just linking to your social media channels, there’s still ways to do this without compromising on privacy, for example with Shariff.

Analytics:

Here it gets interesting!

With analytics, tracking information about and behaviour of visitors is not a side effect, but the essence. Thus analytics must be bad, right?

Well, approach it with a metaphor:

You’re visiting somebody at their house. Obviously the person you’re visiting knows you are there and can make some observations like what you’re wearing and how you’re behaving.

What if that person would make notes about your visit in a personal diary? Would you consider that a breach of your privacy?

In contrast: What if that person had microphones and cameras installed which let some big corporation observe your visit? Just for the convenience of having somebody else write the diary?

In my opinion the difference between this 2 scenarios is so huge that it would deserve a very clear distinction in terminology.

Unfortunately, we lack that. It’s just analytics either way.

So, do we use analytics tools for the P4P websites?

Yes, we do.

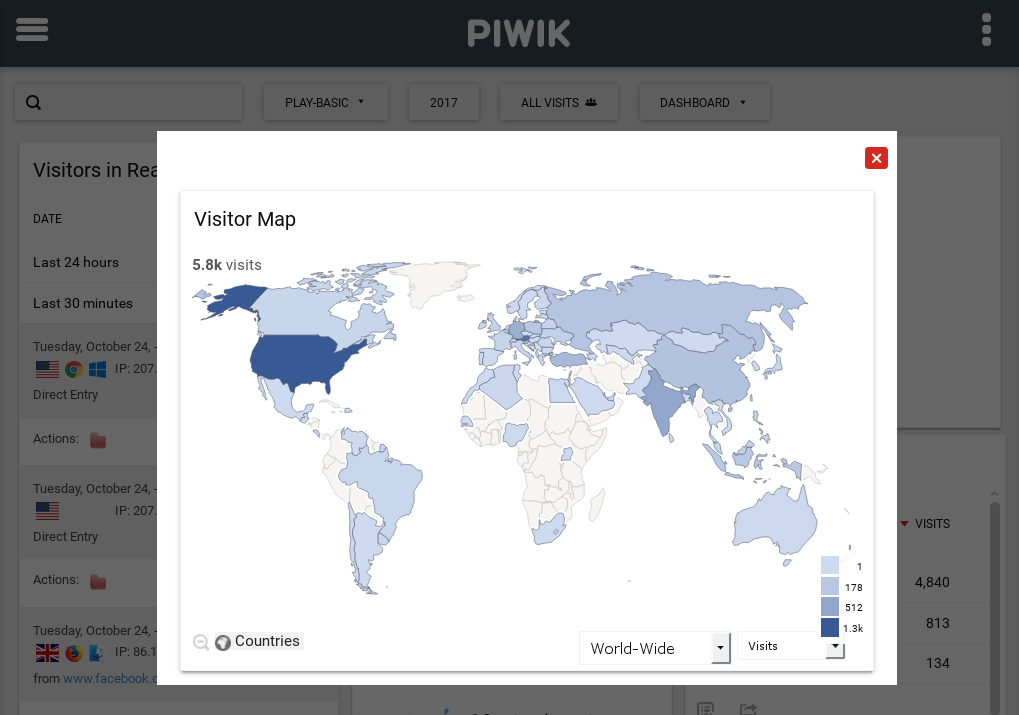

We have an instance of Piwik running on the same server which hosts the websites.

There’s a detail worth noting: we aren’t using Piwik the way it’s used by default: by adding a Javascript snippet to the websites. Instead we use log files generated by the webserver (in our case Nginx) as information source together with this script to import into Piwik.

We tried this solution because it somehow feels more natural. Since webserver logs (which correspond to the aforementioned personal diary) are created anyway (e.g. in order to be able to detect and diagnose problems), why not also use them for analytics instead of adding overhead to the client software?

Piwik is great if you want a powerful and configurable tool which can also be used by technically less literate people.

If you just need some nicely visualized stats, there exist even simpler tools, for example goaccess.

Invisible trackers

No, this is not about invisible images and similar tricks to hide trackers.

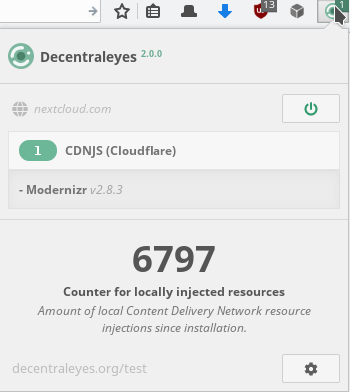

There’s a class of trackers which hides in plain sight, because it’s not (yet) perceived as trackers in general: Content Delivery Networks (CDNs).

The basic idea of CDNs makes a lot of sense: geographical distribution increases network resilience and can reduce overall traffic and latencies.

There’s however an important implication which is in my opinion underrated: having a web application load resources from a CDN leaks information to the CDN provider. The basic mechanism is very similar to the one described above for the Facebook like button. Technically, there’s often no difference: a resource is loaded from a server belonging to another domain than shown to the visitor in the browser address bar.

Now, you may assume that e.g. Google doesn’t exploit such information generated by websites embedding assets e.g. from Google Fonts. Maybe they’re just being nice.

Or maybe they figured out another service they can offer for no money, but in exchange of some more personal data to feed to their algorithms.

I don’t know.

Being in doubt, we decided to not leak visitor data to CDNs in the first place and spend the extra few minutes to also self-host all assets (including fonts).

CDNs are not per se bad. They are needed where a lot of data has to be moved around, e.g. for video streaming.

Typical web applications, P4P included, don’t really need that. The reason why many websites load their javascript, fonts and other assets from CDNs anyway is in my opinion mostly convenience (e.g. copy’n paste of sample code) and also half-myths about performance benefits.

Having a decent webserver, bundling assets often leads to better performance than using CDNs because of the fewer connections and DNS roundtrips (something we developers notoriously overlook when measuring performance of websites) needed (here’s a nice example for such an analysis). This is especially true when using HTTP/2.

Also, the benefits of shared caching are often smaller than imagined.

Related side note: Ironically, the rise of https has the side effect of making transparent caches (which, when used with SRI, would be an elegant alternative for many use cases) impossible, contributing to the rise of explicit CDN usage and hereby to a kind of centralization.

There’s however some hope for re-decentralization in the form of IPFS (see this experiment I conducted with it) and similar technologies.

Infrastructure

Is there anything else to consider?

What about DNS services and hosting providers?

After all, this are 3rd party services which are also at least theoretically able to track our visitor’s interaction with us. How can we trust them to not abuse that data?

Here our general policy is to make choices which don’t lead to more centralization.

We believe that ultimately centralization is what creates potential for abuse. This is especially true in the context of huge data collections and machine learning.

The choices we made are gandi.net for DNS (one of the not-so-many DNS services offering .coop-domains — besides, no bullshit is exactly what we want) and a solid dedicated server from Hetzner.

An important part of the infrastructure is beyond our control: the ISP a visitor goes through.

Recent developments in the US have shown that wherever there’s an opportunity to monetize personal data, there may be attempts to do so.

Self defense

In today’s web we’re unfortunately confronted with countless sites and applications which make low or no effort to protect our privacy.

But self defense is possible.

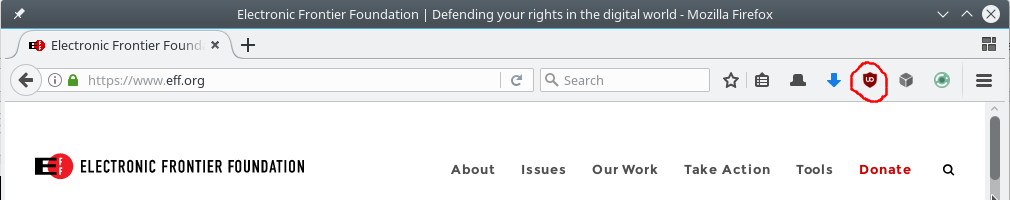

Most browsers support extensions which can block trackers. One such example is uBlock Origin which is open source (important for trustworthiness) and highly configurable.

With Brave there’s now also a browser with tracking protection as a built-in core feature.

Less known is that you can also block CDN requests without breaking websites: with the extension Decentraleyes which intercepts many CDN requests for common assets and serves those from harddisk instead.

If you want to delve deeper into the topic of tools for self defense and their effectiveness, I recommend this paper.

Finally:

I’m fully aware that just blocking trackers (especially ads) is not a scalable solution. The more widespread it gets, the more awareness and pushback it will cause as long as we lack alternative viable business models for the many services which are today powered by monetizing user attention and/or data.

We at lab10 collective are working hard on helping create such alternatives which follow the principle of Ethical Design. Because it’s important!